The state of CRON in 2022

An observation on how running programs on a schedule in a reliable and modern way is neither easy nor simple in 2022. It always involves some sort of sysadmin know-how or third-party tooling, and it is still fragile.

Most developers will agree that one of the big goals of programming is to automate things. An automation is nothing more than a series of steps that are executed by a machine when given a set of inputs.

There is a lot of information available on how one can automate things by writing code. One such example is the great book "Automate the Boring Stuff with Python" by Al Sweigart (huge fan!).

Things are, however, not that straightforward when the goal is to run an automation in an automated way, especially when the automation script itself has to be updated every now and then. Having been a developer for a while now, I cannot think of anything more boring than making sure something runs automatically: it's tedious and doesn't involve any intellectual challenge (except in Factorio, love that one!). Assembly lines are mostly automated today: press a button, wait a bit, and there's a car.

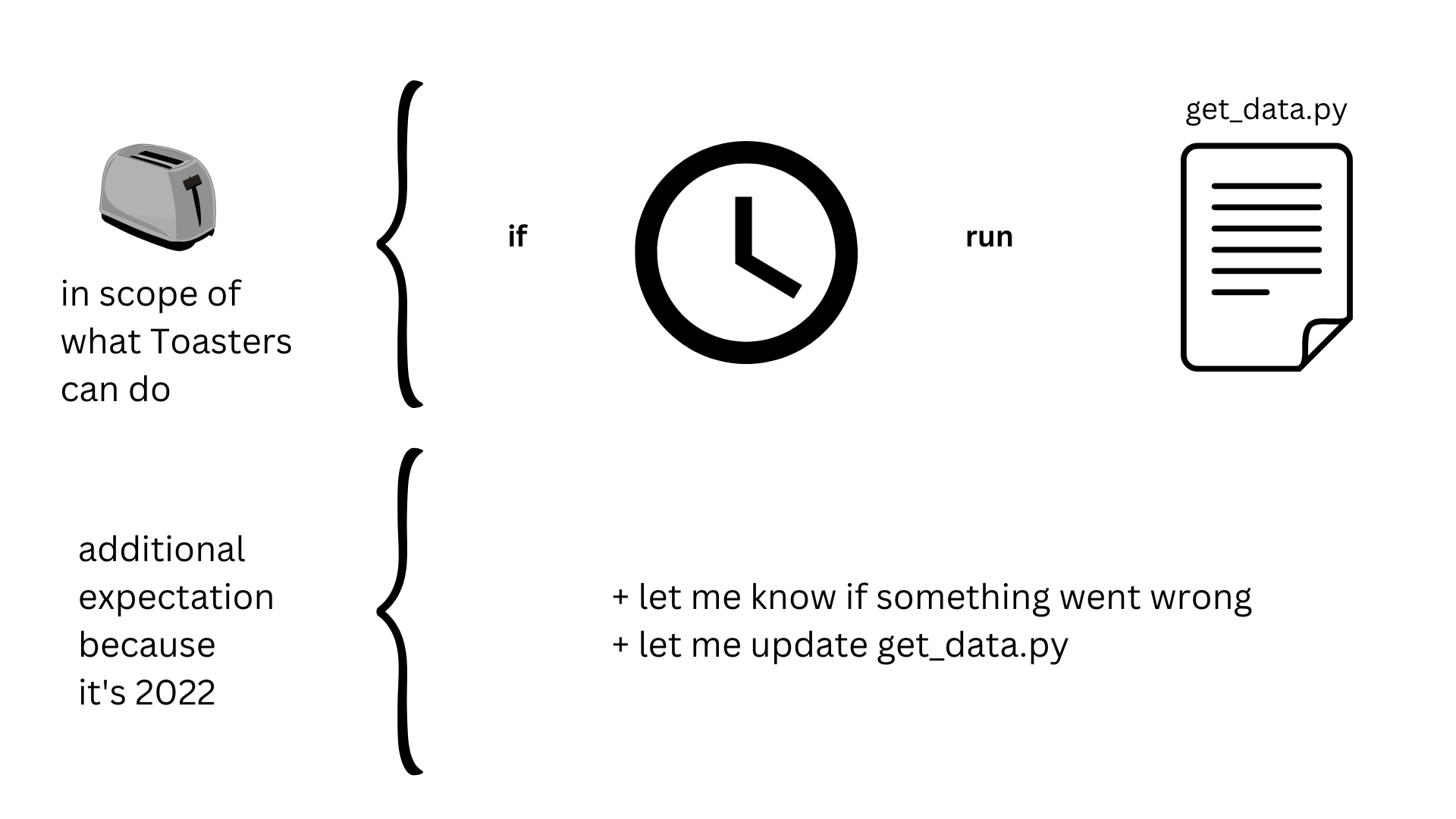

Let's take the example of the CRON job, which in the industry usually refers to some kind of task that is run periodically at fixed times, dates, or intervals. To drive this point home, assume we have a Python script we wrote, get_data.py, that depends on an external library (requests or something like that) and connects to a database to write a few results before exiting. On our development machine it runs perfectly fine. We now want to run this code every day at 18:00 CEST, get notified when it fails, and be able to update it every now and then. What we absolutely do not want is to couple any line of that code to an automation infrastructure, or anything else for that matter.

How would you approach this seemingly trivial task? What tools would you use for it? How do you think someone starting out with programming would approach this simple requirement?

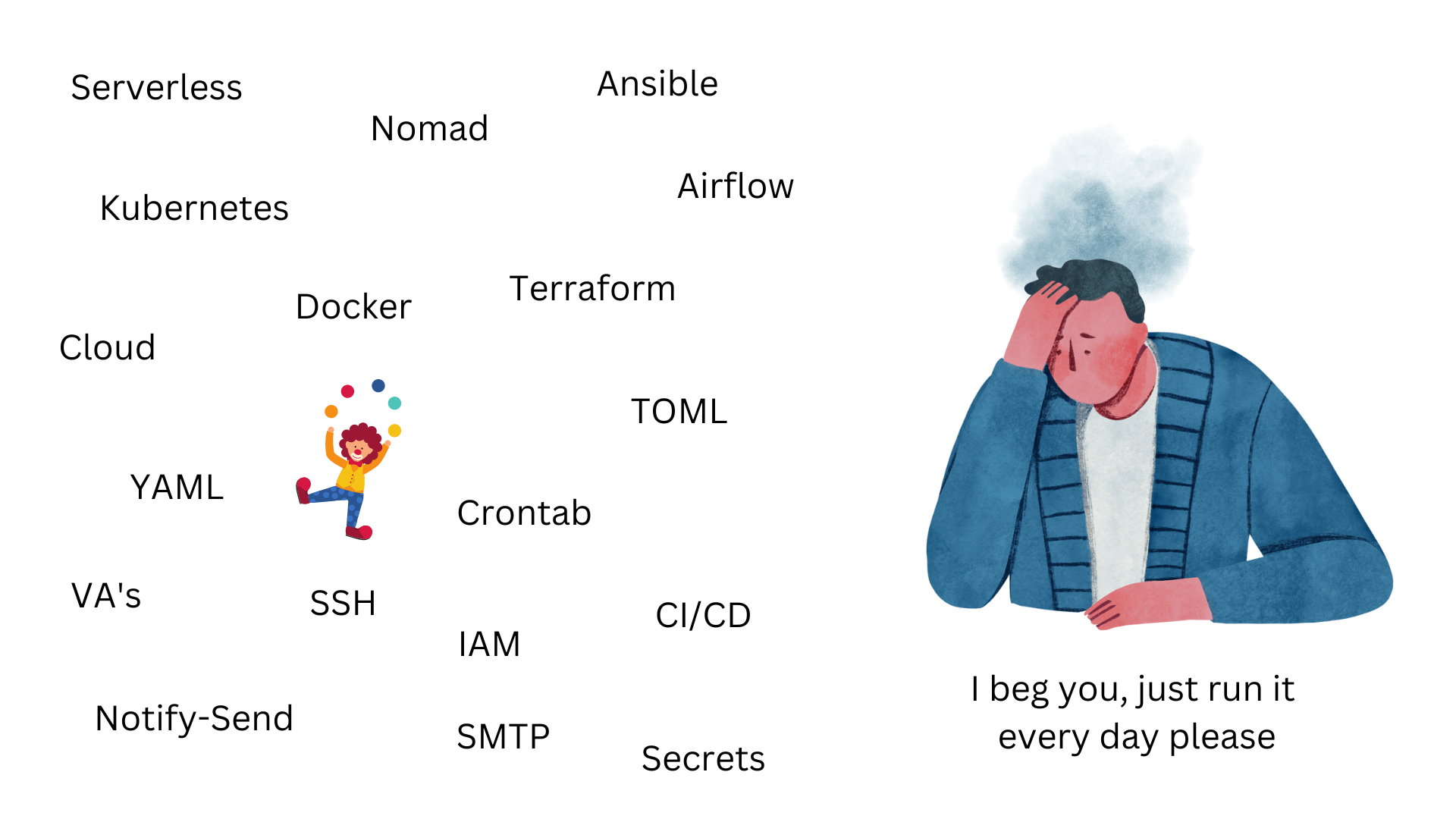

I have asked this question a lot over the past months, and most of the answers were a mix of:

- Some dockerizing step (or Podmanizing, whatever you like more) of the application

- Use GitHub/GitLab actions coupled with CI/CD to run on their hosted instances and juggle some YAML

- Use some sort of hosted orchestrator (Kubernetes, Nomad, Airflow...), write a YAML file (or a ✨ DAG ✨) for the job, connect it to your Docker registry, write logs somewhere accessible, collect metrics in Prometheus, add alerts in Grafana, and juggle some YAML, TOML, secrets, volume mounts and networking

- Use a serverless cloud offering and learn how to use and configure its scheduler. Many involve building a light API wrapper around the application that accepts HTTP requests, and still require Docker, some CI/CD and more

- Rent a single VPS, add the job through crontab -e, and configure the mail server to send you logs by email (or Telegram) if the task errors out. Each time you want to update the code, log into the machine and update the script with vim (if you manage to exit it) or pull it from Git/Docker. Do it all again for the next update and hope you remember the machine's configuration and custom bash scripts (an okay-ish option, but it means a lot of babysitting of that server and its logs)

- A variation on the previous one, but using Ansible/Chef, because let's add more abstraction, why not

- Hire a couple of virtual assistants and walk them through the instructions, ask them to run the code and report back in case of errors (no joke)

- Install a tool on the server that exposes a user interface, provides a frontend for your tasks and does some random stuff behind the scenes. Of course you need to try to keep up with updates and security patches (that you now have to maintain too) and understand how it all works when you log back in to the server in 2 months

- Use some sort of SaaS, most of which still require manual effort and an understanding of how they work, especially when you need to update the job (new dependency or new run context)
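To make the "let me know if there was an error" part of the VPS option concrete, here is a minimal sketch of a wrapper that a crontab entry could call instead of calling get_data.py directly. The name `run_and_report`, the `notify` callback and the ten-minute timeout are my own assumptions for illustration; the point is only that the job is run as a child process, so not a single line of get_data.py has to know about the scheduler or the alerting.

```python
import subprocess
import sys
from typing import Callable, Sequence


def run_and_report(
    command: Sequence[str],
    notify: Callable[[str], None],
    timeout_seconds: float = 600.0,  # assumed limit, tune per job
) -> int:
    """Run `command` as a child process and call `notify` only on failure.

    `notify` is a hypothetical hook: it could send an email, post to a chat,
    or simply write to a log file. The wrapped script stays untouched.
    """
    label = " ".join(command)
    try:
        result = subprocess.run(
            command,
            capture_output=True,
            text=True,
            timeout=timeout_seconds,
        )
    except subprocess.TimeoutExpired:
        notify(f"{label} timed out after {timeout_seconds}s")
        return 124  # same exit code the coreutils `timeout` command uses
    except OSError as exc:
        # The command could not be started at all (missing interpreter, etc.)
        notify(f"{label} could not be started: {exc}")
        return 127  # shell convention for "command not found"

    if result.returncode != 0:
        # Keep only the end of stderr so the notification stays readable.
        stderr_tail = result.stderr.strip()[-2000:]
        notify(f"{label} exited with {result.returncode}\n{stderr_tail}")
    return result.returncode
```

Calling `run_and_report([sys.executable, "get_data.py"], notify=print)` from a small entry script would be enough for a first version. Note that this only covers failures the wrapper gets to see: if the machine is down or the scheduler never fires, nothing is reported.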

Now you suddenly come to the realisation that we somehow blew the simple requirement of "please run this thing every day, let me know if there was an error during execution and let me update it every month" completely out of proportion. We didn't even discuss "also tell me when the job somehow didn't run", otherwise we'd run out of yaks to shave. My point here is that, to this day, automating simple things takes us on different tangents, none of which is really very robust.
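For the "tell me when the job somehow didn't run" case, the usual trick is a heartbeat check (sometimes called a dead man's switch): the job records a timestamp on success, and something else complains when that timestamp gets too old. Below is a minimal sketch of just the staleness check. The function name `is_overdue`, the one-day interval and the one-hour grace period are assumptions for illustration, and where the timestamp is stored is deliberately left open.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def is_overdue(
    last_success: Optional[datetime],
    now: datetime,
    expected_interval: timedelta = timedelta(days=1),  # assumed: daily job
    grace: timedelta = timedelta(hours=1),             # assumed slack
) -> bool:
    """Return True when no successful run was recorded recently enough."""
    if last_success is None:
        return True  # the job has never reported in
    return now - last_success > expected_interval + grace


# Example: the last success was yesterday at 18:00 UTC and it is now 19:30 UTC,
# so more than 25 hours have passed and the job counts as missed.
last_run = datetime(2022, 10, 1, 18, 0, tzinfo=timezone.utc)
check_time = datetime(2022, 10, 2, 19, 30, tzinfo=timezone.utc)
missed = is_overdue(last_run, check_time)
```

The catch is that this check has to run somewhere other than the machine it is watching, which is exactly how one more yak joins the queue.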

I can already estimate the average agile planning poker results for this task and the smile of the scrum "masters" knowing they're going to make it through at least a dozen more sprints without too much emotional investment. You really can't, and shouldn't, expect developers to become sysadmins to run a scheduled task. The additional cognitive load is just too high for little to no benefit (you're just making more problems and increasing the potential failure modes of the whole thing).

Keep in mind that this is supposed to be the ABCs of automation, yet it is so difficult to achieve in 2022. Granted, localised automation (a one-off script you run on your local PC) is a bit simpler than distributed/delegated automation (something that has to run somewhere else), but it really shouldn't be like this. At this stage one would expect that, besides automating things, we would have easy ways of automating the running of automated things. But no.

Overall, it seems that you have to be familiar with some Rube Goldberg-like set of tools to run generic code on a schedule. This is especially overwhelming for juniors who are just joining the field and are faced with challenges like this. So many options and so many different flavours of doing things. The task is so simple that a toaster or a microwave could solve it, given an electricity supply, and yet we have made it so complicated.

I can't help but think that by trying to simplify deployments and infrastructure we ended up automating and normalising yak shaving, creating more complexity and barriers to entry for something so ubiquitous and essential to our line of work: automation.

We're not talking about running something every day for 10 years, although that would be a fun challenge to solve; we're simply talking about a silly Python script that needs to run every day with a modicum of higher (but not far-fetched) expectations. This makes me wonder now and then whether they shipped Kubernetes or Airflow in the space probes that are sent to distant celestial objects to run diagnostics on a schedule.

We can nowadays literally make molten sand (microprocessors) draw pictures (Stable Diffusion, DALL-E, ...) but have not yet come up with a simple and reliable way of running an evolving piece of code every now and then without involving arcane rituals and ruining a whole business week.

Either way, I'm extremely curious which of the above options you think is the best and most efficient use of YOUR time, or the canonical way of solving this problem. What are your thoughts on a pragmatic, yet professional way of solving this (without using the words "production", "highly available" or "fault tolerant") in a maximum of 30 minutes?

I'm certain there are things out there that solve this problem elegantly; if not, let's build one. This was not meant to be just a rant but an observation. :)

And finally, can you guess which of the above methods my special favourite is? ;)